Mid-semester program reviews are standard practice at most colleges and universities. If you’re a program administrator, you play an important role in overseeing and interpreting these reviews, but a program review is only as valuable as the metrics it measures. A well-crafted program review can provide insights that help you promote student and staff success at your institution. This guide highlights the key higher ed mid-semester metrics to include in your program review to draw out the most constructive feedback from your students.

Why program reviews matter

For program administrators, mid-semester and mid-year reviews are a powerful tool for continuous program improvement. To develop an effective program review, it’s important to understand what you want it to achieve. A well-crafted review can bring several benefits to your program, including:

- Guiding curriculum and course development: A mid-semester program review can help you identify improvement areas for curriculum content, assessments, learning materials, tools, and support resources.

- Improving student outcomes: A program review can highlight what aspects of the program students find most helpful, as well as anything they feel is obstructing their learning. At the mid-semester point, this information can be pivotal for preventing disengagement and improving year-end outcomes.

- Enhancing faculty performance: Even the most growth-minded lecturers have their blind spots. Constructive student feedback can help faculty recognize opportunities to enhance their teaching effectiveness, promoting employee development. You can also incorporate program review data on teaching effectiveness into your mid-year performance reviews for educators.

- Supporting goal-setting: Mid-semester and mid-year review data can help you identify relevant key performance indicators (KPIs) to track throughout the following review period and set actionable goals in those areas. Each review is an opportunity to check progress toward current KPIs and set new ones.

- Aiding accreditation: Program reviews can contribute to your reporting requirements for initial and maintained accreditation. These reviews demonstrate your commitment to continuous improvement and serve as evidence of student engagement and faculty performance.

- Cultivating accountability and empathy: Mid-semester, mid-year, and annual reviews contribute to a healthy culture of accountability and empathy in your faculty. On the one hand, teachers know their students are holding them accountable to your institution’s high standards. On the other, students know your faculty hears and values their voices when evaluating programs.

15 higher ed mid-semester program review metrics to track

The value you derive from program reviews is the product of four factors:

- Relevance of the parameters the review evaluates

- Clarity of the questions you use to elicit responses about those parameters

- Insights you gain by collecting, organizing, and interpreting review data

- Effectiveness of the measures you implement to improve the program based on those insights

Each factor depends on the one before it. The positive impact of your mid-semester program reviews rests on the metrics you evaluate, which determine the questions that give you the data you act on.

The following are 15 of the most important program review metrics to track. You could create a comprehensive program review to cover all these metrics or choose a few you find most relevant to your priorities. If you opt for the comprehensive approach, consider formulating just one question for each metric to avoid overwhelming your students. If you narrow the metrics down, you could include two questions for each metric, using different framings to ensure understanding and maximize data quality.

1. Material relevance

One of the greatest challenges for almost any program curriculum is distilling the content to give students the most value within the available time. Every section of learning material and every assessment must be relevant to the program’s overall outcomes, which in turn must prepare students for their working lives. Students will be more engaged when they perceive each course component as part of a coherent learning experience aligned with their goals. To evaluate this metric, ask questions like:

- How relevant is this program’s content to your degree? If any topics were not relevant, why not?

- Do you believe this program will help you succeed in your intended career path? Why or why not?

2. Clarity of objectives

To maximize student engagement and success, students must understand the purpose of each lesson and assessment. The best way to discover whether your instructors are making these objectives clear is to ask the students. Review questions to check this metric include:

- Did the lecturer make all the program objectives clear to you at the beginning of the semester?

- Did you understand what knowledge or skills each lecture aimed to build?

- Did you understand the criteria used to evaluate your performance in assessments?

3. Ease of understanding

Some concepts are more advanced than others, but effective learning materials and instruction should enable every student in your class to grasp the content with reasonable effort. The simplest way to evaluate this is to ask students to rate how easy they found it to understand the curriculum content. Allow them to select from:

- Very easy

- Easy

- Moderately challenging

- Difficult

- Very difficult

If the content is technical, it’s all right if a few students find it difficult to understand, so long as those who need extra support can access it. But if most of the class found the content difficult or very difficult to understand, you may need to review the clarity of the teaching content or the delivery style. If most of the class falls into the easy or moderately challenging categories, you can probably maintain the current presentation level, depending on the nature of the content.
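As a rough illustration, the rule of thumb above could be applied to a batch of responses with a short script. This is a minimal sketch, not a prescribed method: the function name, the recommendation wording, and the reading of "most of the class" as more than 50 percent of respondents are all assumptions made for the example.

```python
from collections import Counter

# Rating labels taken from the answer options listed above.
HARD = {"Difficult", "Very difficult"}
COMFORTABLE = {"Easy", "Moderately challenging"}


def summarize_difficulty(responses):
    """Return (shares, recommendation) for a list of rating labels.

    Assumption: "most of the class" means more than half of respondents.
    """
    if not responses:
        return {}, "no responses"
    counts = Counter(responses)
    total = len(responses)
    shares = {label: count / total for label, count in counts.items()}
    hard_share = sum(shares.get(label, 0.0) for label in HARD)
    comfortable_share = sum(shares.get(label, 0.0) for label in COMFORTABLE)
    if hard_share > 0.5:
        return shares, "review clarity of content or delivery"
    if comfortable_share > 0.5:
        return shares, "maintain presentation level"
    return shares, "no clear majority"


# Hypothetical responses from five students.
sample = ["Easy", "Difficult", "Very difficult", "Difficult", "Moderately challenging"]
shares, recommendation = summarize_difficulty(sample)
print(recommendation)
```

A result of "no clear majority" is a prompt to read the open-ended comments more closely, since the ratings alone do not point in one direction.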

4. Components to retain

This metric and the two that follow invite student input about the usefulness of specific program content. It’s valuable to know which elements students feel are most important to keep in the program. Understanding this can help you avoid unhelpful curriculum changes and keep a strong core to build the rest of the program content around. You could ask students:

- Which lectures, chapters, or activities were most useful for achieving the program objectives? Why?

- Which lectures, chapters, or activities most increased your interest in this subject?

5. Components to remove

This metric invites direct student input about program components they found less useful. If many students suggest removing the same component, it’s likely worth exploring whether to do so or how to present that component more effectively. Questions to evaluate this metric include:

- Which lectures, chapters, or activities were the least useful for achieving the program objectives? Why?

- If you could remove one lecture, chapter, or activity from this semester’s curriculum, which would you leave out? Why?
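To spot components that many students suggest removing, you could tally the answers to the second question. The sketch below is illustrative only: the function name and the 25 percent threshold for "many students" are assumptions, and real free-text answers usually need manual grouping before they can be counted this way.

```python
from collections import Counter


def frequently_flagged(suggestions, class_size, min_share=0.25):
    """List components named by at least min_share of the class.

    Assumptions: answers have already been reduced to a component name,
    and min_share=0.25 is an arbitrary cutoff for "many students".
    """
    if class_size <= 0:
        raise ValueError("class_size must be positive")
    # Normalize case and surrounding whitespace; skip blank answers.
    counts = Counter(s.strip().lower() for s in suggestions if s.strip())
    return [
        (name, count)
        for name, count in counts.most_common()
        if count / class_size >= min_share
    ]


# Hypothetical answers from a class of 10 students.
answers = ["Lecture 4", "lecture 4 ", "Chapter 2 quiz", "Lecture 4", ""]
print(frequently_flagged(answers, class_size=10))
```

Dividing by class size rather than by the number of answers keeps a handful of responses from a large class from looking like a consensus.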

6. Components to add

This metric looks for gaps in the curriculum. You and your faculty are the subject matter experts, but students may have valuable insights about new curriculum components that could enrich the course. Few students will suggest these proactively, so a thoughtful prompt in a mid-semester program review can draw out helpful ideas. Sample questions to identify what students feel you should add to the program include:

- What is your top question about this subject matter that this semester’s curriculum has not addressed?

- If you could add one new lecture, chapter, or activity to this semester’s curriculum, what would it cover?

7. Learning thoroughness

This metric overlaps with components the students suggest adding. The aim is to gauge how thoroughly the curriculum helped them understand the subject matter. While no program is exhaustive, students should feel that their lesson content has given them a confident grasp of the knowledge and skills they need to fulfill the program objectives. Here are three ways you could frame a question to check this metric:

- After completing all the lessons, how many of the program topics could you confidently explain to another student?

- On a scale of 1 to 10, how confident do you feel in your understanding of this subject?

- Did the content cover the subject in sufficient detail? If not, which concepts do you think needed further exploration?

8. Material helpfulness

This metric applies to the prepared learning resources you provide to your students, rather than the program curriculum as a whole. In your question, mention specific resources you have provided to help students understand what they’re evaluating. These materials could include:

- Textbooks

- Prescribed journal articles

- Instructional videos

- Practice quizzes

For example, ask them to rate out of 10 how useful the textbook and accompanying videos were for understanding the topics.

9. Assessment readiness

This metric assumes the assessments are relevant to the program outcomes and focuses on how well prepared students felt for them. The aim is to evaluate how closely lesson content and activities align with the assessments. This alignment is essential for student success. Questions to evaluate this metric include:

- Did lectures sufficiently explain the topics that assessments covered?

- Did assessments test all the main topics the lectures explored?

- What assessment topics, if any, did lectures not prepare you enough to handle?

10. Instruction clarity

The next few metrics focus on delivery rather than curriculum content. Even with excellent content, a program needs effective delivery for students to succeed. Instruction clarity refers to how easy the teaching is for students to follow. Clear communication about advanced concepts is a skill that comes with practice, so it’s important for lecturers to gauge their own progress based on what students report about this metric. Questions to elicit feedback about instruction clarity include:

- Did the lecturer use terms you could understand when introducing new concepts?

- Did the lecturer structure lessons in a logical way that you could follow from one point to the next?

11. Instruction pacing

While instruction clarity is fundamental, pacing is also important for maintaining student engagement and success. Both overly slow and rushed teaching can hinder student interest, engagement, and performance. Finding the right pace for the class as a whole is one of the more challenging duties of a lecturer, but mid-semester reviews can glean student feedback to help teachers find their rhythm. Questions you may find helpful in evaluating this metric include:

- Does the lecturer usually speak at a pace you can easily understand?

- Does the lecturer spend enough time on each point for you to understand it before moving on to the next idea?

- For you to understand the content and stay focused, should the lecturer continue teaching at their current pace? If not, should the teaching be faster or slower?

12. Instruction method variation

Maximizing student engagement and success involves using a variety of teaching methods. Along with a conventional lecturing approach, teachers should incorporate elements of innovative teaching methods and technologies. For example, project-based and gamified learning can improve comprehension in many students. To learn whether students are engaged or craving more variation in teaching methods, you could ask questions like:

- Does the lecturer present concepts using a variety of tools and methods?

- Does this program include sufficient, too much, or too little teaching method variation to keep you engaged?

13. Lecturer interaction

Students need lessons to move beyond a monologue format. They need opportunities to ask questions and share their ideas. It’s essential for lecturers to create space for these interactions. You can help track their success in creating this space by asking students:

- Does the lecturer invite you to ask questions and share thoughts with them during class?

- How comfortable do you feel interacting with your lecturer during class?

- How comfortable do you feel approaching your lecturer with questions after class?

14. Peer interaction

Along with lecturer interaction, students can benefit from collaborating with their peers during lessons and group projects. Besides improving engagement with the content, peer collaboration helps students develop valuable soft skills like communication, teamwork, and leadership. Invite your students to share feedback on the peer interaction opportunities your program offers by asking questions like:

- Did you have opportunities to collaborate with peers to learn or complete a project this semester?

- Did you have the necessary resources and support for these collaborations to be helpful?

- How has this program affected your confidence in collaborating with peers?

15. Impact on interest

While engagement goes beyond interest, students who develop an intrinsic interest in the subject matter will experience greater motivation and often perform better in your program. Effective program design and teaching methods can help many students find a subject more interesting and enjoyable. Gauge whether your program is having this impact by asking students:

- Has your interest level in the subject increased, decreased, or remained unchanged between the beginning of the program and now?

- Which lesson or activity did you find most interesting or enjoyable? Why?

- Which lesson or activity did you find least interesting or enjoyable? Why?

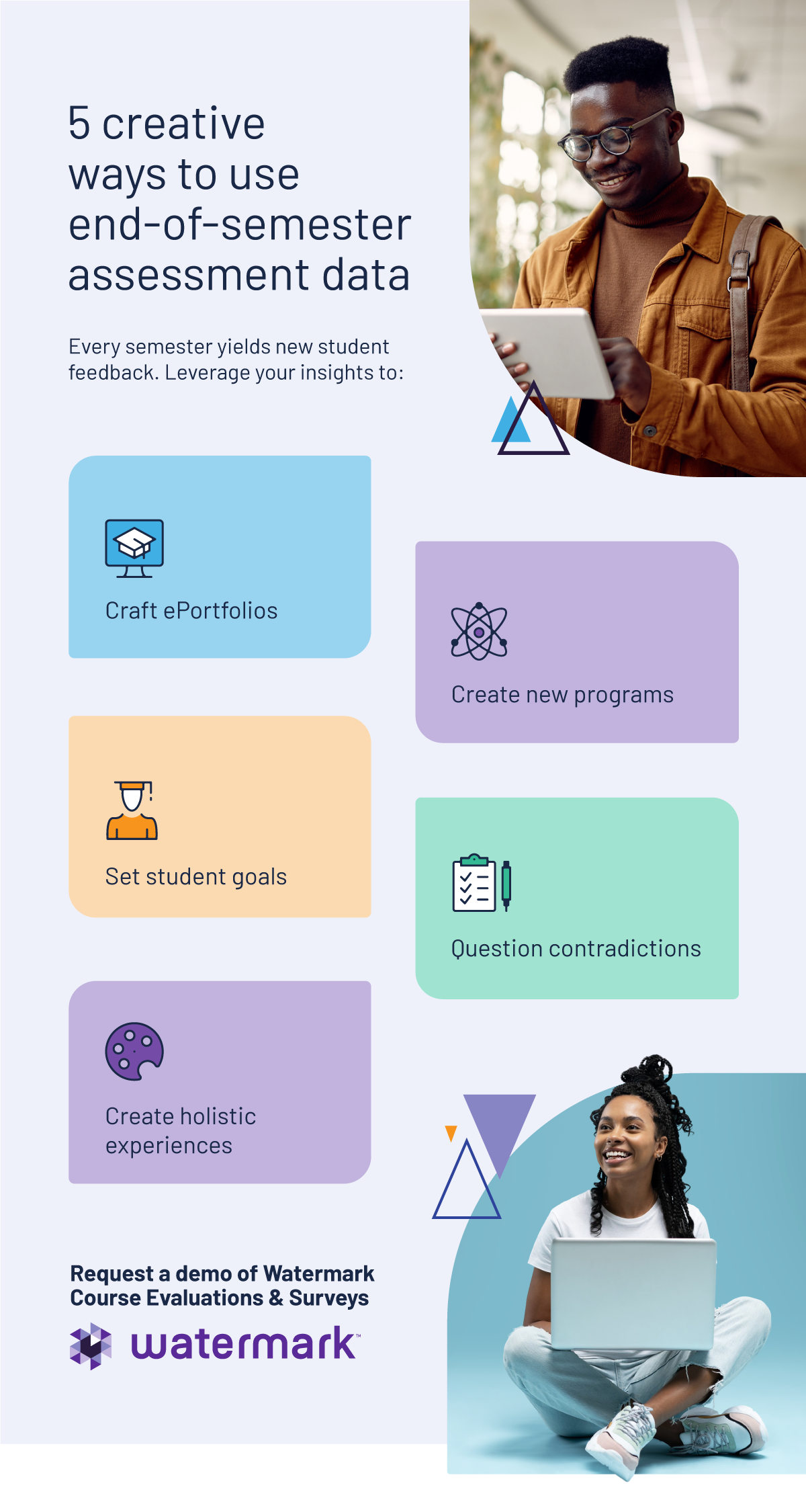

Ensure quality reviews with data insights from Watermark

Maximizing the benefits of program reviews for faculty, student, and institutional success depends on effective data collection and analysis. The Watermark Course Evaluations & Surveys software solution provides everything you need to get better feedback and turn it into actionable insights, including:

- Higher response rates through LMS integration, reminders, and optional grade gating.

- Streamlined implementation with minimal IT involvement.

- Simple, user-friendly survey building.

- Immediate access to results.

- Easy-to-use reporting and presentation functions to distill takeaways.

- AI-powered qualitative analysis.

Request a free demo today for efficient, effective program reviews.